GGUF Loader

🎉 NEW: Agentic Mode Now Available! Transform your local AI into an autonomous coding assistant that can read, create, edit, and organize files in your workspace.

GGUF Loader is a privacy-first desktop platform for running local LLMs with advanced agentic capabilities, floating tools, and a powerful plugin system — giving you instant AI anywhere on your screen, all running offline on your machine.

The problem:

Running open-source LLMs locally is powerful but painful. It's either command-line only or scattered across multiple tools. There's no GUI that brings it all together: no ecosystem, no UX, no quick way to make LLMs useful in daily tasks. Even worse, most local LLMs just chat; they can't autonomously read your files, write code, or automate development tasks. You're stuck doing everything manually.

The solution:

GGUF Loader gives users a beautiful desktop interface, one-click model loading, and a plugin system inspired by Blender. But it goes further: with autonomous agentic mode, your AI can now read, create, edit, and organize files automatically. Generate entire project structures, refactor code, create tests, and automate workflows, all using fully offline models. Plus, with its built-in floating button, you can summon AI from anywhere on your screen. It's a privacy-first productivity layer that turns LLMs into true autonomous agents you can drag, click, and extend with plugins.

✍️ Product Vision

The problem: Running open-source LLMs locally is powerful but frustrating. Users face messy installs, scattered tools, CLI-only interfaces, and no way to extend functionality without code. Even power users lack a smooth workflow to manage models, and, critically, there's no autonomous agent that can actually DO things on their own machine: read files, write code, or automate development tasks.

The solution: GGUF Loader turns your PC into a local AI platform with autonomous agentic capabilities. With a modern GUI, one-click model loading, and a Blender-style plugin system, you now have an AI agent that can read, create, edit, and organize files automatically. Generate code, automate refactoring, build project structures, add summarizers, floating agents, RAG tools, and more — all running offline. Whether you're a developer, researcher, or AI tinkerer, GGUF Loader gives you a stable, extensible foundation for intelligent tools that respect your privacy and run 24/7 without cloud lock-in.

🎯 Mission Statement

We believe AI shouldn’t live in the cloud — it should live on your screen, always-on, fully yours. GGUF Loader is building the interface layer for the local LLM revolution: a plugin-based platform with floating assistants, developer extensibility, and a vision to empower millions with intelligent local tools.

📥 Download GGUF Loader

Option 1: Download ZIP (Recommended - Works on All Platforms)

⬇️ Download GGUF Loader ZIP (~5 MB)

How to Run:

- Download the ZIP file above

- Extract the ZIP file to any folder on your computer

- Run the launcher:

  - Windows: Double-click `launch.bat`

  - Linux/macOS: Double-click `launch.sh` (or run `chmod +x launch.sh && ./launch.sh` in a terminal)

- First time only: Wait 1-2 minutes while dependencies install automatically

- Done! The app will start. Next time, just double-click the launcher again; it starts instantly.

Option 2: Windows Executable (No Installation Required)

⬇️ Download GGUFLoader_v2.1.1.agentic_mode.exe (~150-300 MB)

Just download and double-click to run! No Python, no dependencies, no setup needed.

Option 3: Install via pip

```shell
pip install ggufloader
ggufloader
```

📂 Links

- GitHub: https://github.com/GGUFloader/gguf-loader

- Website: https://ggufloader.github.io

- PyPI: https://pypi.org/project/ggufloader/

🚀 What's New in v2.1.1 (February 2026)

🤖 Agentic Mode - Autonomous AI Assistant

Transform your local AI into an autonomous coding assistant that can:

- 📖 Read Files - Analyze code, documentation, and project structure

- ✍️ Create Files - Generate new source files, configs, and documentation

- ✏️ Edit Files - Modify existing code and update configurations

- 📁 Organize Files - Create folders, move files, and restructure projects

- ⚡ Automate Tasks - Execute multi-step workflows without manual intervention
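These tools work on real files, so they should never reach outside the folder you select. The sketch below is a hypothetical illustration, not GGUF Loader's actual code. It defines a `Workspace` class (an invented name) whose read, create, edit and move methods resolve every path first and refuse anything that lands outside the workspace root. It uses only the standard library and stays compatible with Python 3.8.

```python
import shutil
from pathlib import Path


class Workspace:
    """Hypothetical file tools that refuse to touch anything outside `root`."""

    def __init__(self, root: str) -> None:
        self.root = Path(root).resolve()

    def _safe(self, rel_path: str) -> Path:
        # resolve() collapses ".." and follows symlinks, so "../secret.txt"
        # cannot slip past the containment check below.
        target = (self.root / rel_path).resolve()
        try:
            target.relative_to(self.root)  # ValueError if target is outside root
        except ValueError:
            raise PermissionError(f"{rel_path!r} is outside the workspace") from None
        return target

    def read(self, rel_path: str) -> str:
        return self._safe(rel_path).read_text(encoding="utf-8")

    def create(self, rel_path: str, content: str) -> None:
        target = self._safe(rel_path)
        if target.exists():
            raise FileExistsError(rel_path)  # never silently overwrite
        target.parent.mkdir(parents=True, exist_ok=True)
        target.write_text(content, encoding="utf-8")

    def edit(self, rel_path: str, old: str, new: str) -> None:
        # Replace the first occurrence of `old`; fail loudly if it is missing.
        target = self._safe(rel_path)
        text = target.read_text(encoding="utf-8")
        if old not in text:
            raise ValueError(f"{old!r} not found in {rel_path}")
        target.write_text(text.replace(old, new, 1), encoding="utf-8")

    def move(self, src: str, dst: str) -> None:
        # Organize files: move or rename within the workspace, creating folders.
        source, dest = self._safe(src), self._safe(dst)
        dest.parent.mkdir(parents=True, exist_ok=True)
        shutil.move(str(source), str(dest))
```

Resolving before checking matters. A naive string-prefix test on the unresolved path would accept `src/../../outside.txt`.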

🎯 Key Features

- Toggle Agent Mode - Enable/disable agentic capabilities with a single checkbox

- Workspace Selector - Choose your project folder with an intuitive file browser

- Real-time Status - Visual indicators show agent state (Ready, Processing, Error)

- Tool Execution - Agent can use various tools to accomplish complex tasks

- Multi-step Reasoning - Break down complex problems into manageable steps

- 100% Private - All processing happens locally on your machine
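Tool execution with multi-step reasoning is commonly built as a loop:

- the model proposes a tool call;
- the app runs it;
- the result is fed back until the model produces a final answer.

The sketch below assumes an invented JSON reply format (`{"tool": ..., "args": ...}` or `{"final": ...}`). It takes the model as a plain function, so any backend (or a scripted stand-in for testing) can be plugged in. GGUF Loader's real prompt format and tool names are not documented here, and `run_agent` is a hypothetical name.

```python
import json
from typing import Callable, Dict, List

Message = Dict[str, str]


def run_agent(
    model: Callable[[List[Message]], str],
    tools: Dict[str, Callable[..., str]],
    task: str,
    max_steps: int = 8,
) -> str:
    """Hypothetical observe-act loop; returns the model's final answer."""
    messages: List[Message] = [{"role": "user", "content": task}]
    for _ in range(max_steps):
        reply = model(messages)
        messages.append({"role": "assistant", "content": reply})
        try:
            action = json.loads(reply)
        except json.JSONDecodeError:
            return reply  # plain text is treated as the final answer
        if not isinstance(action, dict):
            return reply
        if "final" in action:
            return str(action["final"])
        name = action.get("tool")
        if not isinstance(name, str) or name not in tools:
            observation = f"error: unknown tool {name!r}"
        else:
            try:
                observation = str(tools[name](**action.get("args", {})))
            except Exception as exc:  # report the failure so the model can retry
                observation = f"error: {exc}"
        # Feed the tool result back so the next step can build on it.
        messages.append({"role": "tool", "content": observation})
    return "error: step limit reached"
```

In practice `model` would wrap a local GGUF model, and `tools` would map names like `read_file` to workspace-confined functions. The `max_steps` cap keeps a confused model from looping forever.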

🧩 Floating Assistant Button

A persistent, system-wide AI helper that hovers over your screen — summon AI from anywhere to summarize, reply, translate, or search — all using fully offline models.

🔌 Add-on System (Blender-style Plugins)

Build your own AI tools inside GGUF Loader! Addons live directly in the chat UI with toggle switches — think PDF summarizers, spreadsheet bots, email assistants, and more.
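Blender add-ons follow a simple convention. Each module exposes a metadata dict (`bl_info`) plus `register()` and `unregister()` functions, and the preferences toggle calls one or the other. The sketch below applies that idea to a chat app. It is a hypothetical registry for illustration only: `AddonManager`, `ADDON_INFO` and the message hook are invented names, not GGUF Loader's addon API. One deviation from Blender is that `register()` here returns the hook the chat UI should call.

```python
import types
from typing import Callable, Dict, List, Optional

Hook = Callable[[str], Optional[str]]


class AddonManager:
    """Hypothetical registry: one on/off switch per add-on module."""

    def __init__(self) -> None:
        self.addons: Dict[str, types.ModuleType] = {}
        self.hooks: Dict[str, Hook] = {}  # only enabled add-ons appear here

    def add(self, module: types.ModuleType) -> None:
        self.addons[module.ADDON_INFO["name"]] = module

    def is_enabled(self, name: str) -> bool:
        return name in self.hooks

    def toggle(self, name: str, on: bool) -> None:
        # Like Blender: switching on calls register(), switching off unregister().
        if on == self.is_enabled(name):
            return
        module = self.addons[name]
        if on:
            self.hooks[name] = module.register()  # in this sketch, returns a hook
        else:
            module.unregister()
            del self.hooks[name]

    def on_message(self, text: str) -> List[str]:
        # Let every enabled add-on react to a chat message.
        results = []
        for hook in self.hooks.values():
            out = hook(text)
            if out is not None:
                results.append(out)
        return results


# Example add-on built in memory; a real one would be a module on disk.
word_count = types.ModuleType("word_count")
word_count.ADDON_INFO = {"name": "Word Count", "version": (0, 1)}
word_count.register = lambda: (lambda text: f"{len(text.split())} words")
word_count.unregister = lambda: None
```

Keeping enabled hooks in their own dict means a disabled add-on costs nothing at message time. The toggle switch in the UI maps directly to `toggle(name, on)`.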

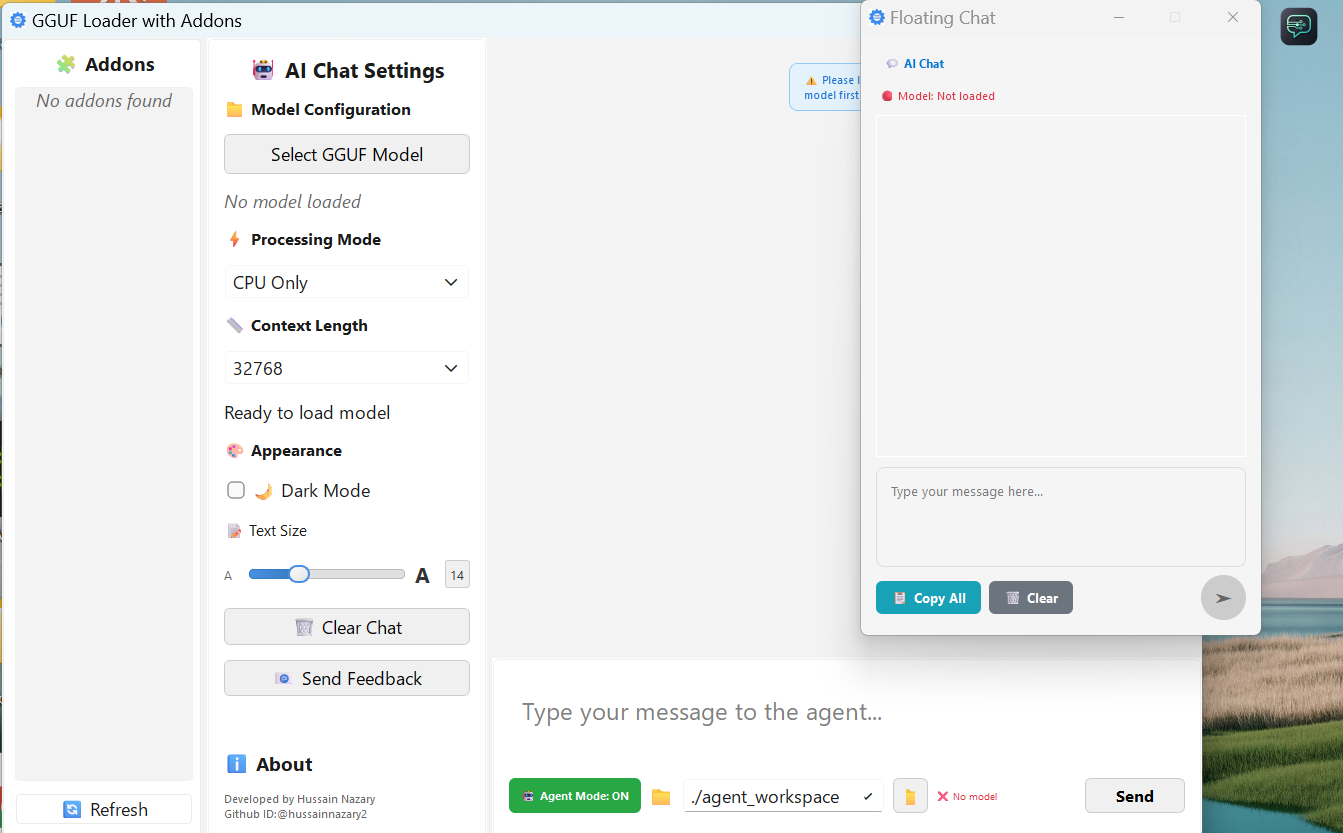

📸 Screenshot

Modern GUI with agentic mode, floating assistant, and plugin system — all running locally on your machine.

🔖 Model Card

This “model” repository hosts the Model Card and optional demo Space for GGUF Loader, a desktop application that loads, manages, and chats with GGUF‑format large language models entirely offline.

📝 Description

GGUF Loader, with its floating button, is lightweight software that lets you easily run advanced large language models (LLMs) such as Mistral, LLaMA, and DeepSeek on Windows, macOS, and Linux. Its drag-and-drop graphical interface makes loading models quick and easy.

- ✨ GUI‑First: No terminal commands; point‑and‑click interface

- 🔌 Plugin System: Extend with addons (PDF summarizer, email assistant, spreadsheet automator…)

- ⚡️ Lightweight: Runs on machines as modest as Intel i5 + 16 GB RAM

- 🔒 Offline & Private: All inference happens locally—no cloud calls

🎯 Intended Uses

- Autonomous Development: Let AI generate code, create project structures, and automate refactoring

- Local AI Prototyping: Experiment with open GGUF models without API costs

- Privacy‑Focused Workflows: Chat and automate tasks privately on your own machine

- Code Generation: Generate boilerplate code, unit tests, and documentation

- File Automation: Automate file organization, configuration updates, and project restructuring

- Plugin Workflows: Build custom data‑processing addons (e.g. summarization, code assistant)

⚠️ Limitations

- No cloud integration: Purely local, no access to OpenAI or Hugging Face inference APIs

- Workspace access: Agentic mode requires explicit workspace folder selection for file operations

- Model dependent: Agentic capabilities work best with instruction-tuned models (Mistral-7B recommended)

- Requires Python 3.8+ and its dependencies (llama-cpp-python, PySide6) for pip installation (not needed for the Windows .exe)

- GUI only: No headless server/CLI‑only mode (coming soon)

📚 Citation

If you use GGUF Loader in your research or project, please cite:

```bibtex
@misc{ggufloader2026,
  title        = {GGUF Loader: Agentic AI Platform with GUI \& Plugin System for Local LLMs},
  author       = {Hussain Nazary},
  year         = {2026},
  howpublished = {\url{https://github.com/GGUFloader/gguf-loader}},
  note         = {Version 2.1.1, PyPI: ggufloader}
}
```

🚀 How to Use

1. Install

```shell
pip install ggufloader
```

2. Launch GUI

```shell
ggufloader
```

3. Load Your Model

- Drag & drop your `.gguf` model file into the window

- Select plugin(s) from the sidebar (e.g. “Summarize PDF”)

- Start chatting!
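Before a dropped file goes to the loader, it can help to confirm that it really is GGUF. Every GGUF file starts with the four ASCII bytes `GGUF`. A little-endian `uint32` format version follows. From version 2 onward, that is followed by two `uint64` counts: tensors, then metadata key/value pairs.

The function below is a standalone sketch that reads only that fixed header; it is not part of GGUF Loader. It deliberately rejects version 1 files, whose counts were 32-bit, and big-endian files rather than guessing.

```python
import struct
from typing import NamedTuple


class GGUFHeader(NamedTuple):
    version: int
    tensor_count: int
    metadata_kv_count: int


def read_gguf_header(path: str) -> GGUFHeader:
    """Parse the fixed-size GGUF header (little-endian, format v2 or later)."""
    with open(path, "rb") as f:
        raw = f.read(24)  # 4 magic + 4 version + 8 tensor count + 8 kv count
    if len(raw) < 24 or raw[:4] != b"GGUF":
        raise ValueError(f"{path} is not a GGUF file")
    version, tensors, kvs = struct.unpack("<IQQ", raw[4:24])
    if version > 0xFFFF:
        # A byte-swapped version number points to a big-endian file.
        raise ValueError(f"{path} looks big-endian; not handled here")
    if version < 2:
        raise ValueError(f"GGUF v{version} uses 32-bit counts; not handled here")
    return GGUFHeader(version, tensors, kvs)
```

For example, `read_gguf_header("./models/mistral.gguf")` either returns the header or raises before any slow model loading starts.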

4. Python API

```python
from ggufloader import chat

# Ensure you have a GGUF model in ./models/mistral.gguf
chat("Hello offline world!", model_path="./models/mistral.gguf")
```
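GGUF Loader lists llama-cpp-python as a dependency. Its `Llama` class can also drive the same `.gguf` file directly, outside the GUI, through an OpenAI-style `create_chat_completion` method. This is a minimal sketch that assumes llama-cpp-python is installed and a model exists at the example path. `ask` is a hypothetical helper name, and `n_ctx=4096` and `max_tokens=256` are arbitrary choices. Only a user message is sent, because some Mistral chat templates reject a system role.

```python
def ask(prompt: str, model_path: str = "./models/mistral.gguf", n_ctx: int = 4096) -> str:
    """Send one chat turn to a local GGUF model via llama-cpp-python."""
    # Imported inside the function so this file loads even without the package.
    from llama_cpp import Llama

    llm = Llama(model_path=model_path, n_ctx=n_ctx, verbose=False)
    result = llm.create_chat_completion(
        messages=[{"role": "user", "content": prompt}],
        max_tokens=256,
    )
    # The response mirrors the OpenAI chat format.
    return result["choices"][0]["message"]["content"]
```

Call it as `print(ask("Hello offline world!"))`. Loading the model on every call is slow, so a longer-lived script would create the `Llama` object once and reuse it.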

📦 Features

| Feature | Description |
|---|---|
| GUI for GGUF LLMs | Point‑and‑click model loading & chatting |
| Plugin Addons | Summarization, code helper, email reply, more |
| Cross‑Platform | Windows, macOS, Linux |
| Multi‑Model Support | Mistral, LLaMA, DeepSeek, Yi, Gemma, OpenHermes |
| Memory‑Efficient | Designed to run on 16 GB RAM or higher |

💡 Comparison

| Tool | GUI | Agentic Mode | Plugins | Pip Install | Offline | Notes |
|---|---|---|---|---|---|---|
| GGUF Loader | ✅ | ✅ | ✅ | ✅ | ✅ | Autonomous agent, extensible UI |
| LM Studio | ✅ | ❌ | ❌ | ❌ | ✅ | Polished, but no automation |
| Ollama | ❌ | ❌ | ❌ | ❌ | ✅ | CLI‑first, narrow use case |
| GPT4All | ✅ | ❌ | ❌ | ✅ | ✅ | Limited extensibility |

🔗 Demo Space

Try a static demo or minimal Gradio embed (no live inference) here:

https://huggingface.co/spaces/Hussain2050/gguf-loader-demo

💻 System Requirements

Minimum Requirements

- OS: Windows 10/11, Linux (Ubuntu 20.04+), or macOS 10.15+

- RAM: 8 GB (for 7B Q4_0 models)

- Storage: 2 GB free space + model size (4-50 GB depending on model)

- CPU: Intel i5 or equivalent (any modern processor)

Recommended Requirements

- RAM: 16 GB or more

- GPU: NVIDIA GPU with CUDA support (optional, for faster inference)

- Storage: SSD for better performance

Model Size Guide

- 7B models (Q4_0): ~4-5 GB, needs 8 GB RAM

- 7B models (Q6_K): ~6-7 GB, needs 12 GB RAM

- 20B models (Q4_K): ~7-8 GB, needs 16 GB RAM

- 120B models (Q4_K): ~46 GB, needs 64 GB RAM
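The size guide follows from simple arithmetic:

- File size ≈ parameter count × bits per weight ÷ 8.
- RAM needs that, plus the KV cache for your context length, plus some runtime overhead.

The sketch below encodes that rule of thumb. The bits-per-weight figures come from llama.cpp's quantization block layouts:

- Q4_0 packs 32 weights into 18 bytes (4.5 bits).
- Q4_K uses 144 bytes per 256 weights (also 4.5 bits).
- Q6_K uses 210 bytes per 256 weights (about 6.56 bits).

Mixed formats such as Q4_K_M keep some tensors at higher precision, so real files come out somewhat larger than this estimate. The 1 GB overhead is a rough guess, not a measured figure.

```python
# Approximate bits per weight, derived from llama.cpp block layouts.
BITS_PER_WEIGHT = {
    "Q4_0": 18 * 8 / 32,    # 32 weights: 16 bytes of 4-bit quants + fp16 scale
    "Q4_K": 144 * 8 / 256,  # 256-weight super-block with scales and mins
    "Q6_K": 210 * 8 / 256,  # 256-weight super-block, 6-bit quants
    "Q8_0": 34 * 8 / 32,    # 32 weights: 32 bytes of 8-bit quants + fp16 scale
    "F16": 16.0,
}

GB = 1e9  # decimal gigabytes, as used on most download pages


def model_file_gb(n_params: float, quant: str) -> float:
    """Lower-bound estimate of a GGUF file size in GB."""
    return n_params * BITS_PER_WEIGHT[quant] / 8 / GB


def kv_cache_gb(n_layers: int, n_kv_heads: int, head_dim: int,
                n_ctx: int, bytes_per_elem: int = 2) -> float:
    """Memory for the K and V caches over n_ctx tokens (fp16 by default)."""
    return 2 * n_layers * n_kv_heads * head_dim * n_ctx * bytes_per_elem / GB


def ram_needed_gb(file_gb: float, kv_gb: float, overhead_gb: float = 1.0) -> float:
    # overhead_gb is a rough allowance for compute buffers and the app itself.
    return file_gb + kv_gb + overhead_gb
```

As a worked example, take Mistral-7B: about 7.24 billion parameters, 32 layers, 8 KV heads of size 128. At Q4_0 with a 4,096-token context this gives a 4.07 GB file and a 0.54 GB cache. Adding the 1 GB allowance totals about 5.6 GB, consistent with the 8 GB guideline above.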

🔽 Download GGUF Models

⚡ Click a link below to download the model file directly (no Hugging Face page in between).

🧠 GPT-OSS Models (Open Source GPTs)

High-quality, Apache 2.0 licensed, reasoning-focused models for local/enterprise use.

🧠 GPT-OSS 120B (MoE)

🧠 GPT-OSS 20B (MoE)

🧠 Mistral-7B Instruct ⭐ Recommended for Agentic Mode

- ⬇️ Download Q4_K_M (4.37 GB) - BEST for agentic workflows ⭐

- ⬇️ Download Q4_0 (4.23 GB) - Faster, slightly lower quality

- ⬇️ Download Q6_K (6.23 GB) - Higher quality, needs more RAM

Q4_K_M offers a good balance of quality and speed for code generation and multi-step problem solving.
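Choosing among the three Mistral-7B downloads comes down to which file fits in memory with room to spare. The helper below is a hypothetical sketch. It ranks the quantizations by quality as described above (Q6_K, then Q4_K_M, then Q4_0) and uses the file sizes listed here. It keeps a fixed amount of RAM free for the OS, the KV cache and the app; the default of 3.5 GB is an arbitrary choice, not a project recommendation.

```python
from typing import Optional

# (quantization, file size in GB) from the download list, best quality first.
MISTRAL_7B_FILES = [
    ("Q6_K", 6.23),
    ("Q4_K_M", 4.37),
    ("Q4_0", 4.23),
]


def pick_quant(total_ram_gb: float, reserve_gb: float = 3.5) -> Optional[str]:
    """Return the highest-quality file that still leaves `reserve_gb` of RAM free."""
    budget = total_ram_gb - reserve_gb
    for quant, size_gb in MISTRAL_7B_FILES:
        if size_gb <= budget:
            return quant
    return None  # nothing fits; consider a smaller model
```

With these defaults, a 16 GB machine gets Q6_K and an 8 GB machine gets Q4_K_M, which matches the recommendation for agentic workflows.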

Qwen 1.5-7B Chat

DeepSeek 7B Chat

LLaMA 3 8B Instruct

🗂️ More Model Collections

🤝 Contributing

We welcome contributions! See CONTRIBUTING.md for guidelines.

🐛 Support & Issues

- Report Issues: GitHub Issues

- Discussions: GitHub Discussions

- Email: hossainnazary475@gmail.com

⚖️ License

This project is licensed under the MIT License. See LICENSE for details.

Built with ❤️ by the GGUF Loader community

Last updated: February 7, 2026