Commit

·

c176aea

0

Parent(s):

ic

Browse filesThis view is limited to 50 files because it contains too many changes.

See raw diff

- A6 - 1.png +0 -0

- Isp.png +0 -0

- PINN/__init__.py +0 -0

- PINN/__pycache__/__init__.cpython-310.pyc +0 -0

- PINN/__pycache__/pinns.cpython-310.pyc +0 -0

- PINN/pinns.py +53 -0

- README.md +13 -0

- TDP.png +0 -0

- TPU.png +0 -0

- Tisp.png +0 -0

- Unknown-3.jpg +0 -0

- ann.png +0 -0

- app.py +83 -0

- bb.md +38 -0

- dH.png +0 -0

- dT.png +0 -0

- dashboard.png +0 -0

- data/bound.pkl +0 -0

- data/dataset.csv +24 -0

- data/dataset.pkl +0 -0

- data/new +0 -0

- data/test.pkl +0 -0

- disc.png +0 -0

- docs/.DS_Store +0 -0

- docs/main.html +106 -0

- fig1.png +0 -0

- gan.png +0 -0

- gen.png +0 -0

- geom.png +0 -0

- graph.jpg +0 -0

- intro.md +453 -0

- invariant.png +0 -0

- maT.png +0 -0

- main.md +1060 -0

- main.py +83 -0

- model.png +0 -0

- models/model.onnx +0 -0

- module_name.md +456 -0

- nets/__init__.py +0 -0

- nets/__pycache__/HET_dense.cpython-310.pyc +0 -0

- nets/__pycache__/__init__.cpython-310.pyc +0 -0

- nets/__pycache__/deep_dense.cpython-310.pyc +0 -0

- nets/__pycache__/dense.cpython-310.pyc +0 -0

- nets/__pycache__/design.cpython-310.pyc +0 -0

- nets/__pycache__/envs.cpython-310.pyc +0 -0

- nets/deep_dense.py +32 -0

- nets/dense.py +27 -0

- nets/design.py +42 -0

- nets/envs.py +491 -0

- nets/opti/__init__.py +0 -0

A6 - 1.png

ADDED

|

Isp.png

ADDED

|

PINN/__init__.py

ADDED

|

File without changes

|

PINN/__pycache__/__init__.cpython-310.pyc

ADDED

|

Binary file (140 Bytes). View file

|

|

|

PINN/__pycache__/pinns.cpython-310.pyc

ADDED

|

Binary file (1.76 kB). View file

|

|

|

PINN/pinns.py

ADDED

|

@@ -0,0 +1,53 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

from torch import nn,tensor

|

| 2 |

+

import numpy as np

|

| 3 |

+

import seaborn as sns

|

| 4 |

+

class PINNd_p(nn.Module):

|

| 5 |

+

""" $d \mapsto P$

|

| 6 |

+

|

| 7 |

+

|

| 8 |

+

"""

|

| 9 |

+

def __init__(self):

|

| 10 |

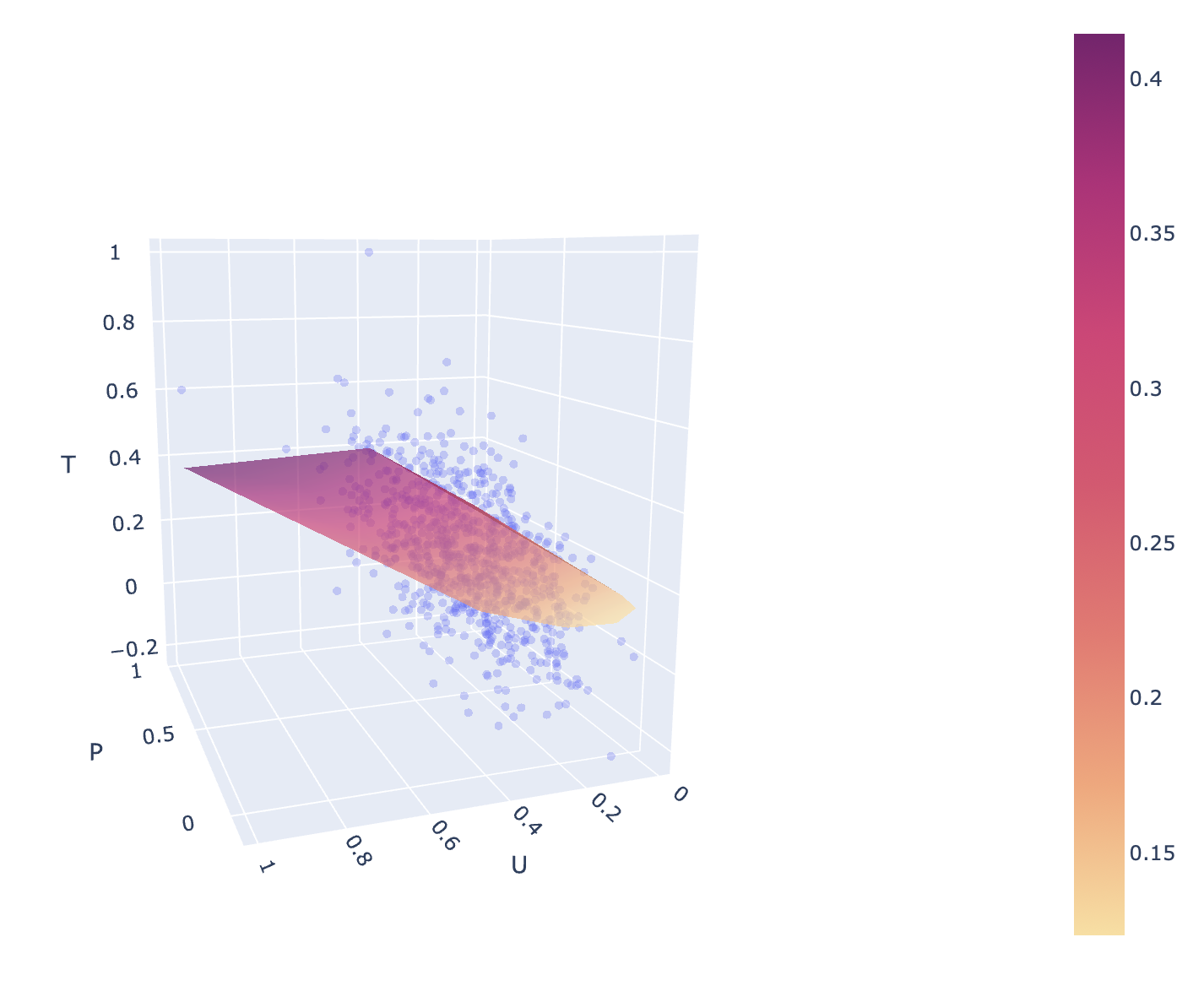

+

super(PINNd_p,self).__init__()

|

| 11 |

+

weights = tensor([60.,0.5])

|

| 12 |

+

self.weights = nn.Parameter(weights)

|

| 13 |

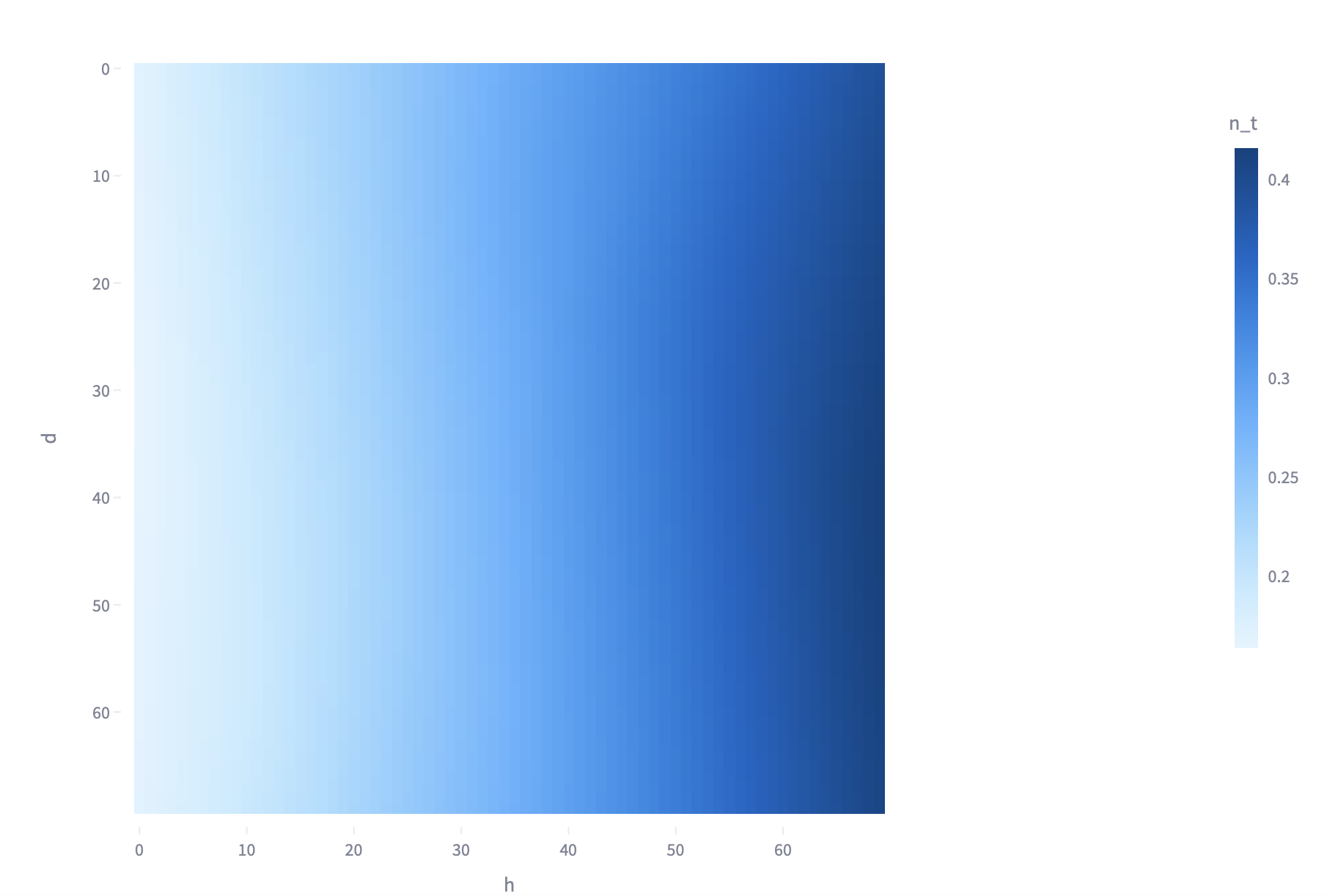

+

def forward(self,x):

|

| 14 |

+

|

| 15 |

+

c,b = self.weights

|

| 16 |

+

x1 = (x[0]/(c*x[1]))**0.5

|

| 17 |

+

return x1

|

| 18 |

+

|

| 19 |

+

class PINNhd_ma(nn.Module):

|

| 20 |

+

""" $h,d \mapsto m_a $

|

| 21 |

+

|

| 22 |

+

|

| 23 |

+

"""

|

| 24 |

+

def __init__(self):

|

| 25 |

+

super(PINNhd_ma,self).__init__()

|

| 26 |

+

weights = tensor([0.01])

|

| 27 |

+

self.weights = nn.Parameter(weights)

|

| 28 |

+

def forward(self,x):

|

| 29 |

+

c, = self.weights

|

| 30 |

+

x1 = c*x[0]*x[1]

|

| 31 |

+

return x1

|

| 32 |

+

|

| 33 |

+

class PINNT_ma(nn.Module):

|

| 34 |

+

"""$ m_a, U \mapsto T$

|

| 35 |

+

|

| 36 |

+

|

| 37 |

+

"""

|

| 38 |

+

def __init__(self):

|

| 39 |

+

super(PINNT_ma,self).__init__()

|

| 40 |

+

weights = tensor([0.01])

|

| 41 |

+

self.weights = nn.Parameter(weights)

|

| 42 |

+

def forward(self,x):

|

| 43 |

+

c, = self.weights

|

| 44 |

+

x1 = c*x[0]*x[1]**0.5

|

| 45 |

+

return x1

|

| 46 |

+

|

| 47 |

+

|

| 48 |

+

|

| 49 |

+

|

| 50 |

+

|

| 51 |

+

|

| 52 |

+

|

| 53 |

+

|

README.md

ADDED

|

@@ -0,0 +1,13 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

---

|

| 2 |

+

title: Hetfit

|

| 3 |

+

emoji: 📉

|

| 4 |

+

colorFrom: yellow

|

| 5 |

+

colorTo: blue

|

| 6 |

+

sdk: streamlit

|

| 7 |

+

sdk_version: 1.17.0

|

| 8 |

+

app_file: app.py

|

| 9 |

+

pinned: false

|

| 10 |

+

license: cc-by-nc-4.0

|

| 11 |

+

---

|

| 12 |

+

|

| 13 |

+

Check out the configuration reference at https://huggingface.co/docs/hub/spaces-config-reference

|

TDP.png

ADDED

|

TPU.png

ADDED

|

Tisp.png

ADDED

|

Unknown-3.jpg

ADDED

|

ann.png

ADDED

|

app.py

ADDED

|

@@ -0,0 +1,83 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

import streamlit as st

|

| 2 |

+

|

| 3 |

+

from nets.envs import SCI

|

| 4 |

+

|

| 5 |

+

|

| 6 |

+

st.set_page_config(

|

| 7 |

+

page_title="HET_sci",

|

| 8 |

+

menu_items={

|

| 9 |

+

'About':'https://advpropsys.github.io'

|

| 10 |

+

}

|

| 11 |

+

)

|

| 12 |

+

|

| 13 |

+

st.title('HETfit_scientific')

|

| 14 |

+

st.markdown("#### Imagine a package which was engineered primarly for data driven plasma physics devices design, mainly low power hall effect thrusters, yup that's it"

|

| 15 |

+

"\n### :orange[Don't be scared away though, it has much simpler interface than anything you ever used for such designs]")

|

| 16 |

+

st.markdown('### Main concepts:')

|

| 17 |

+

st.markdown( "- Each observational/design session is called an **environment**, for now it can be either RCI or SCI (Real or scaled interface)"

|

| 18 |

+

"\n In this overview we will only touch SCI, since RCI is using PINNs which are different topic"

|

| 19 |

+

"\n- You specify most of the run parameters on this object init, :orange[**including generation of new samples**] via GAN"

|

| 20 |

+

"\n- You may want to generate new features, do it !"

|

| 21 |

+

"\n- Want to select best features for more effctive work? Done!"

|

| 22 |

+

"\n- Compile environment with your model of choice, can be ***any*** torch model or sklearn one"

|

| 23 |

+

"\n- Train !"

|

| 24 |

+

"\n- Plot, inference, save, export to jit/onnx, measure performance - **they all are one liners** "

|

| 25 |

+

)

|

| 26 |

+

st.markdown('### tl;dr \n- Create environment'

|

| 27 |

+

'\n```run = SCI(*args,**kwargs)```'

|

| 28 |

+

'\n - Generate features ```run.feature_gen()``` '

|

| 29 |

+

'\n - Select features ```run.feature_importance()```'

|

| 30 |

+

'\n - Compile env ```run.compile()```'

|

| 31 |

+

'\n - Train model in env ```run.train()```'

|

| 32 |

+

'\n - Inference, plot, performance, ex. ```run.plot3d()```'

|

| 33 |

+

'\n #### And yes, it all will work even without any additional arguments from user besides column indexes'

|

| 34 |

+

)

|

| 35 |

+

st.write('Comparison with *arXiv:2206.04440v3*')

|

| 36 |

+

col1, col2 = st.columns(2)

|

| 37 |

+

col1.metric('Geometry accuracy on domain',value='83%',delta='15%')

|

| 38 |

+

col2.metric('$d \mapsto h$ prediction',value='98%',delta='14%')

|

| 39 |

+

|

| 40 |

+

st.header('Example:')

|

| 41 |

+

|

| 42 |

+

st.markdown('Remeber indexes and column names on this example: $P$ - 1, $d$ - 3, $h$ - 3, $m_a$ - 6,$T$ - 7')

|

| 43 |

+

st.code('run = SCI(*args,**kwargs)')

|

| 44 |

+

|

| 45 |

+

run = SCI()

|

| 46 |

+

st.code('run.feature_gen()')

|

| 47 |

+

run.feature_gen()

|

| 48 |

+

st.write('New features: (index-0:22 original samples, else is GAN generated)',run.df.iloc[1:,9:].astype(float))

|

| 49 |

+

st.write('Most of real dataset is from *doi:0.2514/1.B37424*, hence the results mostly agree with it in specific')

|

| 50 |

+

st.code('run.feature_importance(run.df.iloc[1:,1:7].astype(float),run.df.iloc[1:,7]) # Clear and easy example')

|

| 51 |

+

|

| 52 |

+

st.write(run.feature_importance(run.df.iloc[1:,1:6].astype(float),run.df.iloc[1:,6]))

|

| 53 |

+

st.markdown(' As we can see only $h$ and $d$ passed for $m_a$ model, not only that linear dependacy was proven experimantally, but now we got this from data driven source')

|

| 54 |

+

st.code('run.compile(idx=(1,3,7))')

|

| 55 |

+

run.compile(idx=(1,3,7))

|

| 56 |

+

st.code('run.train(epochs=10)')

|

| 57 |

+

if st.button('Start Training⏳',use_container_width=True):

|

| 58 |

+

run.train(epochs=10)

|

| 59 |

+

st.code('run.plot3d()')

|

| 60 |

+

st.write(run.plot3d())

|

| 61 |

+

st.code('run.performance()')

|

| 62 |

+

st.write(run.performance())

|

| 63 |

+

else:

|

| 64 |

+

st.markdown('#')

|

| 65 |

+

|

| 66 |

+

st.markdown('---\nTry it out yourself! Select a column from 1 to 10')

|

| 67 |

+

|

| 68 |

+

|

| 69 |

+

number = st.number_input('Here',min_value=1, max_value=10, step=1)

|

| 70 |

+

|

| 71 |

+

if number:

|

| 72 |

+

if st.button('Compile And Train💅',use_container_width=True):

|

| 73 |

+

st.code(f'run.compile(idx=(1,3,{number}))')

|

| 74 |

+

run.compile(idx=(1,3,number))

|

| 75 |

+

st.code('run.train(epochs=10)')

|

| 76 |

+

run.train(epochs=10)

|

| 77 |

+

st.code('run.plot3d()')

|

| 78 |

+

st.write(run.plot3d())

|

| 79 |

+

|

| 80 |

+

|

| 81 |

+

|

| 82 |

+

st.markdown('In this intro we covered simplest userflow while using HETFit package, resulted data can be used to leverage PINN and analytical models of Hall effect thrusters'

|

| 83 |

+

'\n #### :orange[To cite please contact author on https://github.com/advpropsys]')

|

bb.md

ADDED

|

@@ -0,0 +1,38 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

<a id="nets.opti.blackbox"></a>

|

| 2 |

+

|

| 3 |

+

# :orange[Hyper Paramaters Optimization class]

|

| 4 |

+

## nets.opti.blackbox

|

| 5 |

+

|

| 6 |

+

<a id="nets.opti.blackbox.Hyper"></a>

|

| 7 |

+

|

| 8 |

+

### Hyper Objects

|

| 9 |

+

|

| 10 |

+

```python

|

| 11 |

+

class Hyper(SCI)

|

| 12 |

+

```

|

| 13 |

+

|

| 14 |

+

Hyper parameter tunning class. Allows to generate best NN architecture for task. Inputs are column indexes. idx[-1] is targeted value.

|

| 15 |

+

|

| 16 |

+

<a id="nets.opti.blackbox.Hyper.start_study"></a>

|

| 17 |

+

|

| 18 |

+

#### start\_study

|

| 19 |

+

|

| 20 |

+

```python

|

| 21 |

+

def start_study(n_trials: int = 100,

|

| 22 |

+

neptune_project: str = None,

|

| 23 |

+

neptune_api: str = None)

|

| 24 |

+

```

|

| 25 |

+

|

| 26 |

+

Starts study. Optionally provide your neptune repo and token for report generation.

|

| 27 |

+

|

| 28 |

+

**Arguments**:

|

| 29 |

+

|

| 30 |

+

- `n_trials` _int, optional_ - Number of iterations. Defaults to 100.

|

| 31 |

+

- `neptune_project` _str, optional_ - None

|

| 32 |

+

- neptune_api (str, optional):. Defaults to None.

|

| 33 |

+

|

| 34 |

+

|

| 35 |

+

**Returns**:

|

| 36 |

+

|

| 37 |

+

- `dict` - quick report of results

|

| 38 |

+

|

dH.png

ADDED

|

dT.png

ADDED

|

dashboard.png

ADDED

|

data/bound.pkl

ADDED

|

Binary file (34 kB). View file

|

|

|

data/dataset.csv

ADDED

|

@@ -0,0 +1,24 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

Name,U,d,h,j,Isp,nu_t,T,m_a

|

| 2 |

+

SPT-20 [21],52.4,180,15.0,5.0,32.0,0.47,3.9,839

|

| 3 |

+

SPT-25 [22],134,180,20.0,5.0,10,0.59,5.5,948

|

| 4 |

+

HET-100 [23],174,300,23.5,5.5,14.5,0.50,6.8,1386

|

| 5 |

+

KHT-40 [24],187,325,31.0,9.0,25.5,0.69,10.3,1519

|

| 6 |

+

KHT-50 [24],193,250,42.0,8.0,25.0,0.88,11.6,1339

|

| 7 |

+

HEPS-200,195,250,42.5,8.5,25.0,0.88,11.2,1300

|

| 8 |

+

BHT-200 [2526],200,250,21.0,5.6,11.2,0.94,12.8,1390

|

| 9 |

+

KM-32 [27],215,250,32.0,7.0,16.0,1.00,12.2,1244

|

| 10 |

+

SPT-50M [28],245,200,39.0,11.0,25.0,1.50,16.0,1088

|

| 11 |

+

SPT-30 [23],258,250,24.0,6.0,11.0,0.98,13.2,1234

|

| 12 |

+

KM-37 [29],283,292,37.0,9.0,17.5,1.15,18.5,1640

|

| 13 |

+

CAM200 [3031],304,275,43.0,12.0,24,1.09,17.3,1587

|

| 14 |

+

SPT-50 [21],317,300,39.0,11.0,25.0,1.18,17.5,1746

|

| 15 |

+

A-3 [21],324,300,47.0,13.0,30.0,1.18,18.0,1821

|

| 16 |

+

HEPS-500,482,300,49.5,15.5,25.0,1.67,25.9,1587

|

| 17 |

+

BHT-600 [2632],615,300,56.0,16.0,32,2.60,39.1,1530

|

| 18 |

+

SPT-70 [33],660,300,56.0,14.0,25.0,2.56,40.0,1593

|

| 19 |

+

SPT-100 [934],1350,300,85.0,15.0,25.0,5.14,81.6,1540

|

| 20 |

+

UAH-78AM,520,260,78.0,20,40,2,30,1450

|

| 21 |

+

MaSMi40,330,300,40,6.28,12.56,1.5,13,1100

|

| 22 |

+

MaSMi60,700,250,60,9.42,19,2.56,30,1300

|

| 23 |

+

MaSMiDm,1000,500,67,10.5,21,3,53,1940

|

| 24 |

+

Music-si,140,288,18,2,6.5,0.44,4.2,850

|

data/dataset.pkl

ADDED

|

Binary file (106 kB). View file

|

|

|

data/new

ADDED

|

Binary file (84.1 kB). View file

|

|

|

data/test.pkl

ADDED

|

Binary file (84.2 kB). View file

|

|

|

disc.png

ADDED

|

docs/.DS_Store

ADDED

|

Binary file (6.15 kB). View file

|

|

|

docs/main.html

ADDED

|

@@ -0,0 +1,106 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

<!DOCTYPE html>

|

| 2 |

+

<html>

|

| 3 |

+

<head>

|

| 4 |

+

<meta http-equiv="content-type" content="text/html;charset=utf-8">

|

| 5 |

+

<title>main.py</title>

|

| 6 |

+

<link rel="stylesheet" href="pycco.css">

|

| 7 |

+

</head>

|

| 8 |

+

<body>

|

| 9 |

+

<div id='container'>

|

| 10 |

+

<div id="background"></div>

|

| 11 |

+

<div class='section'>

|

| 12 |

+

<div class='docs'><h1>main.py</h1></div>

|

| 13 |

+

</div>

|

| 14 |

+

<div class='clearall'>

|

| 15 |

+

<div class='section' id='section-0'>

|

| 16 |

+

<div class='docs'>

|

| 17 |

+

<div class='octowrap'>

|

| 18 |

+

<a class='octothorpe' href='#section-0'>#</a>

|

| 19 |

+

</div>

|

| 20 |

+

|

| 21 |

+

</div>

|

| 22 |

+

<div class='code'>

|

| 23 |

+

<div class="highlight"><pre><span></span><span class="kn">import</span> <span class="nn">streamlit</span> <span class="k">as</span> <span class="nn">st</span>

|

| 24 |

+

|

| 25 |

+

<span class="kn">from</span> <span class="nn">nets.envs</span> <span class="kn">import</span> <span class="n">SCI</span>

|

| 26 |

+

|

| 27 |

+

|

| 28 |

+

<span class="n">st</span><span class="o">.</span><span class="n">set_page_config</span><span class="p">(</span>

|

| 29 |

+

<span class="n">page_title</span><span class="o">=</span><span class="s2">"HET_sci"</span><span class="p">,</span>

|

| 30 |

+

<span class="n">menu_items</span><span class="o">=</span><span class="p">{</span>

|

| 31 |

+

<span class="s1">'About'</span><span class="p">:</span><span class="s1">'https://advpropsys.github.io'</span>

|

| 32 |

+

<span class="p">}</span>

|

| 33 |

+

<span class="p">)</span>

|

| 34 |

+

|

| 35 |

+

<span class="n">st</span><span class="o">.</span><span class="n">title</span><span class="p">(</span><span class="s1">'HETfit_scientific'</span><span class="p">)</span>

|

| 36 |

+

<span class="n">st</span><span class="o">.</span><span class="n">markdown</span><span class="p">(</span><span class="s2">"#### Imagine a package which was engineered primarly for data driven plasma physics devices design, mainly hall effect thrusters, yup that's it"</span>

|

| 37 |

+

<span class="s2">"</span><span class="se">\n</span><span class="s2">### :orange[Don't be scared away though, it has much simpler interface than anything you ever used for such designs]"</span><span class="p">)</span>

|

| 38 |

+

<span class="n">st</span><span class="o">.</span><span class="n">markdown</span><span class="p">(</span><span class="s1">'### Main concepts:'</span><span class="p">)</span>

|

| 39 |

+

<span class="n">st</span><span class="o">.</span><span class="n">markdown</span><span class="p">(</span> <span class="s2">"- Each observational/design session is called an **environment**, for now it can be either RCI or SCI (Real or scaled interface)"</span>

|

| 40 |

+

<span class="s2">"</span><span class="se">\n</span><span class="s2"> In this overview we will only touch SCI, since RCI is using PINNs which are different topic"</span>

|

| 41 |

+

<span class="s2">"</span><span class="se">\n</span><span class="s2">- You specify most of the run parameters on this object init, :orange[**including generation of new samples**] via GAN"</span>

|

| 42 |

+

<span class="s2">"</span><span class="se">\n</span><span class="s2">- You may want to generate new features, do it !"</span>

|

| 43 |

+

<span class="s2">"</span><span class="se">\n</span><span class="s2">- Want to select best features for more effctive work? Done!"</span>

|

| 44 |

+

<span class="s2">"</span><span class="se">\n</span><span class="s2">- Compile environment with your model of choice, can be ***any*** torch model or sklearn one"</span>

|

| 45 |

+

<span class="s2">"</span><span class="se">\n</span><span class="s2">- Train !"</span>

|

| 46 |

+

<span class="s2">"</span><span class="se">\n</span><span class="s2">- Plot, inference, save, export to jit/onnx, measure performance - **they all are one liners** "</span>

|

| 47 |

+

<span class="p">)</span>

|

| 48 |

+

<span class="n">st</span><span class="o">.</span><span class="n">markdown</span><span class="p">(</span><span class="s1">'### tl;dr </span><span class="se">\n</span><span class="s1">- Create environment'</span>

|

| 49 |

+

<span class="s1">'</span><span class="se">\n</span><span class="s1">```run = SCI(*args,**kwargs)```'</span>

|

| 50 |

+

<span class="s1">'</span><span class="se">\n</span><span class="s1"> - Generate features ```run.feature_gen()``` '</span>

|

| 51 |

+

<span class="s1">'</span><span class="se">\n</span><span class="s1"> - Select features ```run.feature_importance()```'</span>

|

| 52 |

+

<span class="s1">'</span><span class="se">\n</span><span class="s1"> - Compile env ```run.compile()```'</span>

|

| 53 |

+

<span class="s1">'</span><span class="se">\n</span><span class="s1"> - Train model in env ```run.train()```'</span>

|

| 54 |

+

<span class="s1">'</span><span class="se">\n</span><span class="s1"> - Inference, plot, performance, ex. ```run.plot3d()```'</span>

|

| 55 |

+

<span class="s1">'</span><span class="se">\n</span><span class="s1"> #### And yes, it all will work even without any additional arguments from user besides column indexes'</span>

|

| 56 |

+

<span class="p">)</span>

|

| 57 |

+

<span class="n">st</span><span class="o">.</span><span class="n">write</span><span class="p">(</span><span class="s1">'Comparison with *arXiv:2206.04440v3*'</span><span class="p">)</span>

|

| 58 |

+

<span class="n">col1</span><span class="p">,</span> <span class="n">col2</span> <span class="o">=</span> <span class="n">st</span><span class="o">.</span><span class="n">columns</span><span class="p">(</span><span class="mi">2</span><span class="p">)</span>

|

| 59 |

+

<span class="n">col1</span><span class="o">.</span><span class="n">metric</span><span class="p">(</span><span class="s1">'Geometry accuracy on domain'</span><span class="p">,</span><span class="n">value</span><span class="o">=</span><span class="s1">'83%'</span><span class="p">,</span><span class="n">delta</span><span class="o">=</span><span class="s1">'15%'</span><span class="p">)</span>

|

| 60 |

+

<span class="n">col2</span><span class="o">.</span><span class="n">metric</span><span class="p">(</span><span class="s1">'$d \mapsto h$ prediction'</span><span class="p">,</span><span class="n">value</span><span class="o">=</span><span class="s1">'98%'</span><span class="p">,</span><span class="n">delta</span><span class="o">=</span><span class="s1">'14%'</span><span class="p">)</span>

|

| 61 |

+

|

| 62 |

+

<span class="n">st</span><span class="o">.</span><span class="n">header</span><span class="p">(</span><span class="s1">'Example:'</span><span class="p">)</span>

|

| 63 |

+

|

| 64 |

+

<span class="n">st</span><span class="o">.</span><span class="n">markdown</span><span class="p">(</span><span class="s1">'Remeber indexes and column names on this example: $P$ - 1, $d$ - 3, $h$ - 3, $m_a$ - 6,$T$ - 7'</span><span class="p">)</span>

|

| 65 |

+

<span class="n">st</span><span class="o">.</span><span class="n">code</span><span class="p">(</span><span class="s1">'run = SCI(*args,**kwargs)'</span><span class="p">)</span>

|

| 66 |

+

|

| 67 |

+

<span class="n">run</span> <span class="o">=</span> <span class="n">SCI</span><span class="p">()</span>

|

| 68 |

+

<span class="n">st</span><span class="o">.</span><span class="n">code</span><span class="p">(</span><span class="s1">'run.feature_gen()'</span><span class="p">)</span>

|

| 69 |

+

<span class="n">run</span><span class="o">.</span><span class="n">feature_gen</span><span class="p">()</span>

|

| 70 |

+

<span class="n">st</span><span class="o">.</span><span class="n">write</span><span class="p">(</span><span class="s1">'New features: (index-0:22 original samples, else is GAN generated)'</span><span class="p">,</span><span class="n">run</span><span class="o">.</span><span class="n">df</span><span class="o">.</span><span class="n">iloc</span><span class="p">[</span><span class="mi">1</span><span class="p">:,</span><span class="mi">9</span><span class="p">:]</span><span class="o">.</span><span class="n">astype</span><span class="p">(</span><span class="nb">float</span><span class="p">))</span>

|

| 71 |

+

<span class="n">st</span><span class="o">.</span><span class="n">write</span><span class="p">(</span><span class="s1">'Most of real dataset is from *doi:0.2514/1.B37424*, hence the results mostly agree with it in specific'</span><span class="p">)</span>

|

| 72 |

+

<span class="n">st</span><span class="o">.</span><span class="n">code</span><span class="p">(</span><span class="s1">'run.feature_importance(run.df.iloc[1:,1:7].astype(float),run.df.iloc[1:,7]) # Clear and easy example'</span><span class="p">)</span>

|

| 73 |

+

|

| 74 |

+

<span class="n">st</span><span class="o">.</span><span class="n">write</span><span class="p">(</span><span class="n">run</span><span class="o">.</span><span class="n">feature_importance</span><span class="p">(</span><span class="n">run</span><span class="o">.</span><span class="n">df</span><span class="o">.</span><span class="n">iloc</span><span class="p">[</span><span class="mi">1</span><span class="p">:,</span><span class="mi">1</span><span class="p">:</span><span class="mi">6</span><span class="p">]</span><span class="o">.</span><span class="n">astype</span><span class="p">(</span><span class="nb">float</span><span class="p">),</span><span class="n">run</span><span class="o">.</span><span class="n">df</span><span class="o">.</span><span class="n">iloc</span><span class="p">[</span><span class="mi">1</span><span class="p">:,</span><span class="mi">6</span><span class="p">]))</span>

|

| 75 |

+

<span class="n">st</span><span class="o">.</span><span class="n">markdown</span><span class="p">(</span><span class="s1">' As we can see only $h$ and $d$ passed for $m_a$ model, not only that linear dependacy was proven experimantally, but now we got this from data driven source'</span><span class="p">)</span>

|

| 76 |

+

<span class="n">st</span><span class="o">.</span><span class="n">code</span><span class="p">(</span><span class="s1">'run.compile(idx=(1,3,7))'</span><span class="p">)</span>

|

| 77 |

+

<span class="n">run</span><span class="o">.</span><span class="n">compile</span><span class="p">(</span><span class="n">idx</span><span class="o">=</span><span class="p">(</span><span class="mi">1</span><span class="p">,</span><span class="mi">3</span><span class="p">,</span><span class="mi">7</span><span class="p">))</span>

|

| 78 |

+

<span class="n">st</span><span class="o">.</span><span class="n">code</span><span class="p">(</span><span class="s1">'run.train(epochs=10)'</span><span class="p">)</span>

|

| 79 |

+

<span class="n">run</span><span class="o">.</span><span class="n">train</span><span class="p">(</span><span class="n">epochs</span><span class="o">=</span><span class="mi">10</span><span class="p">)</span>

|

| 80 |

+

<span class="n">st</span><span class="o">.</span><span class="n">code</span><span class="p">(</span><span class="s1">'run.plot3d()'</span><span class="p">)</span>

|

| 81 |

+

<span class="n">st</span><span class="o">.</span><span class="n">write</span><span class="p">(</span><span class="n">run</span><span class="o">.</span><span class="n">plot3d</span><span class="p">())</span>

|

| 82 |

+

<span class="n">st</span><span class="o">.</span><span class="n">code</span><span class="p">(</span><span class="s1">'run.performance()'</span><span class="p">)</span>

|

| 83 |

+

<span class="n">st</span><span class="o">.</span><span class="n">write</span><span class="p">(</span><span class="n">run</span><span class="o">.</span><span class="n">performance</span><span class="p">())</span>

|

| 84 |

+

|

| 85 |

+

<span class="n">st</span><span class="o">.</span><span class="n">write</span><span class="p">(</span><span class="s1">'Try it out yourself! Select a column from 1 to 10'</span><span class="p">)</span>

|

| 86 |

+

<span class="n">number</span> <span class="o">=</span> <span class="n">st</span><span class="o">.</span><span class="n">number_input</span><span class="p">(</span><span class="s1">'Here'</span><span class="p">,</span><span class="n">min_value</span><span class="o">=</span><span class="mi">1</span><span class="p">,</span> <span class="n">max_value</span><span class="o">=</span><span class="mi">10</span><span class="p">,</span> <span class="n">step</span><span class="o">=</span><span class="mi">1</span><span class="p">)</span>

|

| 87 |

+

|

| 88 |

+

<span class="k">if</span> <span class="n">number</span><span class="p">:</span>

|

| 89 |

+

<span class="n">st</span><span class="o">.</span><span class="n">code</span><span class="p">(</span><span class="sa">f</span><span class="s1">'run.compile(idx=(1,3,</span><span class="si">{</span><span class="n">number</span><span class="si">}</span><span class="s1">))'</span><span class="p">)</span>

|

| 90 |

+

<span class="n">run</span><span class="o">.</span><span class="n">compile</span><span class="p">(</span><span class="n">idx</span><span class="o">=</span><span class="p">(</span><span class="mi">1</span><span class="p">,</span><span class="mi">3</span><span class="p">,</span><span class="n">number</span><span class="p">))</span>

|

| 91 |

+

<span class="n">st</span><span class="o">.</span><span class="n">code</span><span class="p">(</span><span class="s1">'run.train(epochs=10)'</span><span class="p">)</span>

|

| 92 |

+

<span class="n">run</span><span class="o">.</span><span class="n">train</span><span class="p">(</span><span class="n">epochs</span><span class="o">=</span><span class="mi">10</span><span class="p">)</span>

|

| 93 |

+

<span class="n">st</span><span class="o">.</span><span class="n">code</span><span class="p">(</span><span class="s1">'run.plot3d()'</span><span class="p">)</span>

|

| 94 |

+

<span class="n">st</span><span class="o">.</span><span class="n">write</span><span class="p">(</span><span class="n">run</span><span class="o">.</span><span class="n">plot3d</span><span class="p">())</span>

|

| 95 |

+

|

| 96 |

+

|

| 97 |

+

|

| 98 |

+

<span class="n">st</span><span class="o">.</span><span class="n">markdown</span><span class="p">(</span><span class="s1">'In this intro we covered simplest user flow while using HETFit package, resulted data can be used to leverage PINN and analytical models of Hall effect thrusters'</span>

|

| 99 |

+

<span class="s1">'</span><span class="se">\n</span><span class="s1"> #### :orange[To cite please contact author on https://github.com/advpropsys]'</span><span class="p">)</span>

|

| 100 |

+

|

| 101 |

+

</pre></div>

|

| 102 |

+

</div>

|

| 103 |

+

</div>

|

| 104 |

+

<div class='clearall'></div>

|

| 105 |

+

</div>

|

| 106 |

+

</body>

|

fig1.png

ADDED

|

gan.png

ADDED

|

gen.png

ADDED

|

geom.png

ADDED

|

graph.jpg

ADDED

|

intro.md

ADDED

|

@@ -0,0 +1,453 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

# :orange[Abstract:]

|

| 2 |

+

Hall effect thrusters are one of the most versatile and

|

| 3 |

+

popular electric propulsion systems for space use. Industry trends

|

| 4 |

+

towards interplanetary missions arise advances in design development

|

| 5 |

+

of such propulsion systems. It is understood that correct sizing of

|

| 6 |

+

discharge channel in Hall effect thruster impact performance greatly.

|

| 7 |

+

Since the complete physics model of such propulsion system is not yet

|

| 8 |

+

optimized for fast computations and design iterations, most thrusters

|

| 9 |

+

are being designed using so-called scaling laws. But this work focuses

|

| 10 |

+

on rather novel approach, which is outlined less frequently than

|

| 11 |

+

ordinary scaling design approach in literature. Using deep machine

|

| 12 |

+

learning it is possible to create predictive performance model, which

|

| 13 |

+

can be used to effortlessly get design of required hall thruster with

|

| 14 |

+

required characteristics using way less computing power than design

|

| 15 |

+

from scratch and way more flexible than usual scaling approach.

|

| 16 |

+

:orange[author:] Korolev K.V [^1]

|

| 17 |

+

title: Hall effect thruster design via deep neural network for additive

|

| 18 |

+

manufacturing

|

| 19 |

+

|

| 20 |

+

# Nomenclature

|

| 21 |

+

|

| 22 |

+

<div class="longtable*" markdown="1">

|

| 23 |

+

|

| 24 |

+

$U_d$ = discharge voltage

|

| 25 |

+

$P$ = discharge power

|

| 26 |

+

$T$ = thrust

|

| 27 |

+

$\dot{m}_a$ = mass flow rate

|

| 28 |

+

$I_{sp}$ = specific impulse

|

| 29 |

+

$\eta_m$ = mass utilization efficiency

|

| 30 |

+

$\eta_a$ = anode efficiency

|

| 31 |

+

$j$ = $P/v$ \[power density\]

|

| 32 |

+

$v$ = discharge channel volume

|

| 33 |

+

$h, d, L$ = generic geometry parameters

|

| 34 |

+

$C_*$ = set of scaling coefficients

|

| 35 |

+

$g$ = free-fall acceleration

|

| 36 |

+

$M$ = ion mass

|

| 37 |

+

|

| 38 |

+

</div>

|

| 39 |

+

|

| 40 |

+

# Introduction

|

| 41 |

+

|

| 42 |

+

<span class="lettrine">T</span><span class="smallcaps">he</span>

|

| 43 |

+

application of deep learning is extremely diverse, but in this study it

|

| 44 |

+

focuses on case of hall effect thruster design. Hall effect thruster

|

| 45 |

+

(HET) is rather simple DC plasma acceleration device, due to complex and

|

| 46 |

+

non linear process physics we don’t have any full analytical performance

|

| 47 |

+

models yet. Though there are a lot of ways these systems are designed in

|

| 48 |

+

industry with great efficiencies, but in cost of multi-million research

|

| 49 |

+

budgets and time. This problem might be solved using neural network

|

| 50 |

+

design approach and few hardware iteration tweaks(Plyashkov et al.

|

| 51 |

+

2022-10-25).

|

| 52 |

+

|

| 53 |

+

Scaled thrusters tend to have good performance but this approach isn’t

|

| 54 |

+

that flexible for numerous reasons: first and foremost, due to large

|

| 55 |

+

deviations in all of the initial experimental values accuracy can be not

|

| 56 |

+

that good, secondly, it is hardly possible to design thruster with

|

| 57 |

+

different power density or $I_{sp}$ efficiently.

|

| 58 |

+

|

| 59 |

+

On the other hand, the neural network design approach has accuracy

|

| 60 |

+

advantage only on domain of the dataset(Plyashkov et al. 2022-10-25),

|

| 61 |

+

this limitations is easily compensated by ability to create relations

|

| 62 |

+

between multiple discharge and geometry parameters at once. Hence this

|

| 63 |

+

novel approach and scaling relations together could be an ultimate

|

| 64 |

+

endgame design tool for HET.

|

| 65 |

+

|

| 66 |

+

Note that neither of these models do not include cathode efficiencies

|

| 67 |

+

and performances. So as the neutral gas thrust components. Most

|

| 68 |

+

correlations in previous literature were made using assumption or

|

| 69 |

+

physics laws(Shagayda and Gorshkov 2013-03), in this paper the new

|

| 70 |

+

method based on feature generation, GAN dataset augmentation and ML

|

| 71 |

+

feature selection is suggested.

|

| 72 |

+

|

| 73 |

+

## Dataset enlargement using GAN

|

| 74 |

+

|

| 75 |

+

As we already have discussed, the data which is available is not enough

|

| 76 |

+

for training NN or most ML algorithms, so I suggest using Generative

|

| 77 |

+

Adversarial Network to generate more similar points. Generative model

|

| 78 |

+

trains two different models - generator and discriminator. Generator

|

| 79 |

+

learns how to generate new points which are classified by discriminator

|

| 80 |

+

as similar to real dataset. Of course it is very understandable that

|

| 81 |

+

model needs to be precise enough not to overfit on data or create new

|

| 82 |

+

unknown correlations. Model was checked via Mean Absolute Percentage

|

| 83 |

+

Error (MAPE) and physical boundary conditions. After assembling most

|

| 84 |

+

promising architecture, the model was able to generate fake points with

|

| 85 |

+

MAPE of $~4.7\%$. We need to measure MAPE to be sure point lie on same

|

| 86 |

+

domain as original dataset, as in this work we are interested in

|

| 87 |

+

sub-kilowatt thrusters. After model generated new points they were check

|

| 88 |

+

to fit in physical boundaries of scaled values (for example thrust

|

| 89 |

+

couldn’t be more than 2, efficiency more than 1.4 and so on, data was

|

| 90 |

+

scaled on original dataset to retain quality), only 0.02% of points were

|

| 91 |

+

found to be outliers. The GAN architecture and dataset sample is

|

| 92 |

+

provided as follows.

|

| 93 |

+

|

| 94 |

+

<!--

|

| 95 |

+

-->

|

| 96 |

+

|

| 97 |

+

# General Relations

|

| 98 |

+

|

| 99 |

+

As we will use dataset of only low power hall thrusters, we can just

|

| 100 |

+

ignore derivation of any non-linear equations and relations and use

|

| 101 |

+

traditional approach here. Let’s define some parameters of anode:

|

| 102 |

+

$$\alpha = \frac{\dot{m}\beta}{{\dot{m}_a}},$$

|

| 103 |

+

Where $\alpha$ is anode

|

| 104 |

+

parameter of $\beta$ thruster parameter. This is selected because this

|

| 105 |

+

way cathode and other losses wont be included in the model. One of key

|

| 106 |

+

differences in this approach is fitting only best and most appropriate

|

| 107 |

+

data, thus we will eliminate some variance in scaling laws. Though due

|

| 108 |

+

to machine learning methods, we would need a lot of information which is

|

| 109 |

+

simply not available in those volumes. So some simplifications and

|

| 110 |

+

assumptions could be made. Firstly, as it was already said, we don’t

|

| 111 |

+

include neutralizer efficiency in the model. Secondly, the model would

|

| 112 |

+

be correct on very specific domain, defined by dataset, many parameters

|

| 113 |

+

like anode power and $I_{sp}$ still are using semi-empirical modelling

|

| 114 |

+

approach. The results we are looking for are outputs of machine learning

|

| 115 |

+

algorithm: specific impulse, thrust, efficiency, optimal mass flow rate,

|

| 116 |

+

power density. Function of input is solely dependant on power and

|

| 117 |

+

voltage range. For the matter of topic let’s introduce semi-empirical

|

| 118 |

+

equations which are used for scaling current thrusters.

|

| 119 |

+

|

| 120 |

+

<div class="longtable*" markdown="2">

|

| 121 |

+

|

| 122 |

+

$$h=C_hd$$

|

| 123 |

+

|

| 124 |

+

$$\dot{m_a} = C_m hd$$

|

| 125 |

+

|

| 126 |

+

$$P_d=C_pU_dd^2$$

|

| 127 |

+

|

| 128 |

+

$$T=C_t\dot{m_a}\sqrt{U_d}$$

|

| 129 |

+

|

| 130 |

+

$$I_{spa}=\frac{T}{\dot{m_a} g}$$

|

| 131 |

+

|

| 132 |

+

$$\eta_a=\frac{T}{2\dot{m_a}P_d}$$

|

| 133 |

+

|

| 134 |

+

</div>

|

| 135 |

+

|

| 136 |

+

Where $C_x$ is scaling coefficient obtained from analytical modelling,

|

| 137 |

+

which makes equations linear. Generally it has 95% prediction band but

|

| 138 |

+

as was said earlier this linearity is what gives problems to current

|

| 139 |

+

thrusters designs (high mass, same power density, average performance).

|

| 140 |

+

The original dataset is

|

| 141 |

+

|

| 142 |

+

| | | | | | | | | |

|

| 143 |

+

|:---------|:---------|:-------|:------|:------|:------|:-------------|:-----|:----------|

|

| 144 |

+

| Thruster | Power, W | U_d, V | d, mm | h, mm | L, mm | m_a,.g/s, | T, N | I\_spa, s |

|

| 145 |

+

| SPT-20 | 52.4 | 180 | 15.0 | 5.0 | 32.0 | 0.47 | 3.9 | 839 |

|

| 146 |

+

| SPT-25 | 134 | 180 | 20.0 | 5.0 | 10 | 0.59 | 5.5 | 948 |

|

| 147 |

+

| Music-si | 140 | 288 | 18 | 2 | 6.5 | 0.44 | 4.2 | 850 |

|

| 148 |

+

| HET-100 | 174 | 300 | 23.5 | 5.5 | 14.5 | 0.50 | 6.8 | 1386 |

|

| 149 |

+

| KHT-40 | 187 | 325 | 31.0 | 9.0 | 25.5 | 0.69 | 10.3 | 1519 |

|

| 150 |

+

| KHT-50 | 193 | 250 | 42.0 | 8.0 | 25.0 | 0.88 | 11.6 | 1339 |

|

| 151 |

+

| HEPS-200 | 195 | 250 | 42.5 | 8.5 | 25.0 | 0.88 | 11.2 | 1300 |

|

| 152 |

+

| BHT-200 | 200 | 250 | 21.0 | 5.6 | 11.2 | 0.94 | 12.8 | 1390 |

|

| 153 |

+

| KM-32 | 215 | 250 | 32.0 | 7.0 | 16.0 | 1.00 | 12.2 | 1244 |

|

| 154 |

+

| ... | | | | | | | | |

|

| 155 |

+

| HEPS-500 | 482 | 300 | 49.5 | 15.5 | 25.0 | 1.67 | 25.9 | 1587 |

|

| 156 |

+

| UAH-78AM | 520 | 260 | 78.0 | 20 | 40 | 2 | 30 | 1450 |

|

| 157 |

+

| BHT-600 | 615 | 300 | 56.0 | 16.0 | 32 | 2.60 | 39.1 | 1530 |

|

| 158 |

+

| SPT-70 | 660 | 300 | 56.0 | 14.0 | 25.0 | 2.56 | 40.0 | 1593 |

|

| 159 |

+

| MaSMi60 | 700 | 250 | 60 | 9.42 | 19 | 2.56 | 30 | 1300 |

|

| 160 |

+

| MaSMiDm | 1000 | 500 | 67 | 10.5 | 21 | 3 | 53 | 1940 |

|

| 161 |

+

| SPT-100 | 1350 | 300 | 85.0 | 15.0 | 25.0 | 5.14 | 81.6 | 1540 |

|

| 162 |

+

|

| 163 |

+

Hosting only 24 entries in total. The references are as follows(Beal et

|

| 164 |

+

al. 2004-11)(Belikov et al. 2001-07-08)(Kronhaus et al. 2013-07)(Misuri

|

| 165 |

+

and Andrenucci 2008-07-21)(Lee et al. 2019-11)

|

| 166 |

+

|

| 167 |

+

In the next section the used neural networks architectures will be

|

| 168 |

+

discussed.

|

| 169 |

+

|

| 170 |

+

# Data driven HET designs

|

| 171 |

+

|

| 172 |

+

Neural networks are a type of machine learning algorithm that is often

|

| 173 |

+

used in the field of artificial intelligence. They are mathematical

|

| 174 |

+

models that can be trained to recognize patterns within large datasets.

|

| 175 |

+

The architecture of GAN’s generator was already shown. In this section

|

| 176 |

+

we will focus on fully connected networks, which are most popular for

|

| 177 |

+

type for these tasks. HETFit code leverages dynamic architecture

|

| 178 |

+

generation of these FcNN’s which is done via meta learning algorithm

|

| 179 |

+

Tree-structured Parzen Estimator for every data input user selects. This

|

| 180 |

+

code uses state-of-art implementation made by OPTUNA. The dynamically

|

| 181 |

+

suggested architecture has 2 to 6 layers from 4 to 128 nodes on each

|

| 182 |

+

with SELU, Tanh or ReLU activations and most optimal optimizer. The code

|

| 183 |

+

user interface is as follows: 1. Specify working environment 2. Load or

|

| 184 |

+

generate data 3. Tune the architecture 4. Train and get robust scaling

|

| 185 |

+

models

|

| 186 |

+

|

| 187 |

+

## FNN

|

| 188 |

+

|

| 189 |

+

All of Fully connected neural networks are implemented in PyTorch as it

|

| 190 |

+

the most powerful ML/AI library for experiments. When the network

|

| 191 |

+

architecture is generated, all of networks have similar training loops

|

| 192 |

+

as they use gradient descend algorithm : Loss function:

|

| 193 |

+

$$L(w, b) \equiv \frac{1}{2 n} \sum_x\|y(x)-a\|^2$$ This one is mean

|

| 194 |

+

square error (MSE) error function most commonly used in FNNs. Next we

|

| 195 |

+

iterate while updating weights for a number of specified epochs this

|

| 196 |

+

way. Loop for number of epochs:

|

| 197 |

+

|

| 198 |

+

\- Get predictions: $\hat{y}$

|

| 199 |

+

|

| 200 |

+

\- Compute loss: $\mathscr{L}(w, b)$

|

| 201 |

+

|

| 202 |

+

\- Make backward pass

|

| 203 |

+

|

| 204 |

+

\- Update optimizer

|

| 205 |

+

|

| 206 |

+

It can be mentioned that dataset of electric propulsion is extremely

|

| 207 |

+

complex due to large deviations in data. Thanks to adavnces in data

|

| 208 |

+

science and ML it is possible to work with it.

|

| 209 |

+

|

| 210 |

+

This way we assembled dataset on our ROI domain of $P$\<1000 $W$ input

|

| 211 |

+

power and 200-500 $V$ range. Sadly one of limitations of such model is

|

| 212 |

+

disability to go beyond actual database limit while not sacrificing

|

| 213 |

+

performance and accuracy.

|

| 214 |

+

|

| 215 |

+

## Physics Informed Neural Networks

|

| 216 |

+

|

| 217 |

+

For working with unscaled data PINN’s were introduced, they are using

|

| 218 |

+

equations 2-7 to generate $C_x$ coefficients. Yes, it was said earlier

|

| 219 |

+

that this method lacks ability to generate better performing HETs, but

|

| 220 |

+

as we have generated larger dataset on same domain as Lee et al.

|

| 221 |

+

(2019-11) it is important to control that our dataset is still the same

|

| 222 |

+

quality as original. Using above mentioned PINN’s it was possible to fit

|

| 223 |

+

coefficients and they showed only slight divergence in values of few %

|

| 224 |

+

which is acceptable.

|

| 225 |

+

|

| 226 |

+

## ML approach notes

|

| 227 |

+

|

| 228 |

+

We already have discussed how HETFit code works and results it can

|

| 229 |

+

generate, the overiew is going to be given in next section. But here i

|

| 230 |

+

want to warn that this work is highly experimental and you should always

|

| 231 |

+

take ML approaches with a grain of salt, as some plasma discharge

|

| 232 |

+

physics in HET is yet to be understood, data driven way may have some

|

| 233 |

+

errors in predictions on specific bands. Few notes on design tool I have

|

| 234 |

+

developed in this work: it is meant to be used by people with little to

|

| 235 |

+

no experience in ML field but those who wants to quickly analyze their

|

| 236 |

+

designs or create baseline one for simulations. One can even use this

|

| 237 |

+

tool for general tabular data as it has mostly no limits whatsoever to

|

| 238 |

+

input data.

|

| 239 |

+

|

| 240 |

+

## Two input variables prediction

|

| 241 |

+

|

| 242 |

+

One of main characteristics for any type of thruster is efficiency, in

|

| 243 |

+

this work I researched dependency of multiple input values to $\eta_t$.

|

| 244 |

+

Results are as follows in form of predicted matrix visualisations.

|

| 245 |

+

Figure 3 takes into account all previous ones in the same time, once

|

| 246 |

+

again it would be way harder to do without ML.

|

| 247 |

+

|

| 248 |

+

|

| 249 |

+

|

| 250 |

+

# Results discussion

|

| 251 |

+

|

| 252 |

+

Let’s compare predictions of semi empirical approach(Lee et al.

|

| 253 |

+

2019-11), approach in paper(Plyashkov et al. 2022-10-25), and finally

|

| 254 |

+

ours. Worth to mention that current approach is easiest to redesign from

|

| 255 |

+

scratch.

|

| 256 |

+

|

| 257 |

+

## NN architecture generation algorithm

|

| 258 |

+

|

| 259 |

+

As with 50 iterations, previously discussed meta learning model is able

|

| 260 |

+

to create architecture with score of 0.9+ in matter of seconds. HETFit

|

| 261 |

+

allows logging into neptune.ai environment for full control over

|

| 262 |

+

simulations. Example trail run looks like that.

|

| 263 |

+

|

| 264 |

+

|

| 265 |

+

|

| 266 |

+

## Power density and magnetic flux dependence

|

| 267 |

+

|

| 268 |

+

Neither of the models currently support taking magnetic flux in account

|

| 269 |

+

besides general physics relations, but we are planning on updating the

|

| 270 |

+

model in next follow up paper. For now $\vec{B}$ relation to power

|

| 271 |

+

remains unresolved to ML approach but the magnetic field distribution on

|

| 272 |

+

z axis is computable and looks like that for magnetically shielded

|

| 273 |

+

thrusters:

|

| 274 |

+

|

| 275 |

+

|

| 276 |

+

|

| 277 |

+

## Dependency of T on d,P

|

| 278 |

+

|

| 279 |

+

Following graph is describing Thrust as function of channel diameter and

|

| 280 |

+

width, where hue map is thrust. It is well known dependency and it has

|

| 281 |

+

few around 95% prediction band (Lee et al. 2019-11)

|

| 282 |

+

|

| 283 |

+

|

| 284 |

+

|

| 285 |

+

## Dependency of T on P,U

|

| 286 |

+

|

| 287 |

+

|

| 288 |

+

|

| 289 |

+

## Dependency of T on $m_a$,P

|

| 290 |

+

|

| 291 |

+

Compared to(Shagayda and Gorshkov 2013-03) The model accounts for more

|

| 292 |

+

parameters than linear relation. So such method proves to be more

|

| 293 |

+

precise on specified domain than semi empirical linear relations.

|

| 294 |

+

|

| 295 |

+

|

| 296 |

+

|

| 297 |

+

## Dependency of $I_{sp}$ on d,h

|

| 298 |

+

|

| 299 |

+

|

| 300 |

+

|

| 301 |

+

We generated many models so far, but using ML we can make single model

|

| 302 |

+